Human Detection from Drone using You Only Look Once (YOLOv5) for Search and Rescue Operation

DOI:

https://doi.org/10.37934/araset.30.3.222235

Keywords:

Human detection, object detection, deep learning, drone search and rescue

Abstract

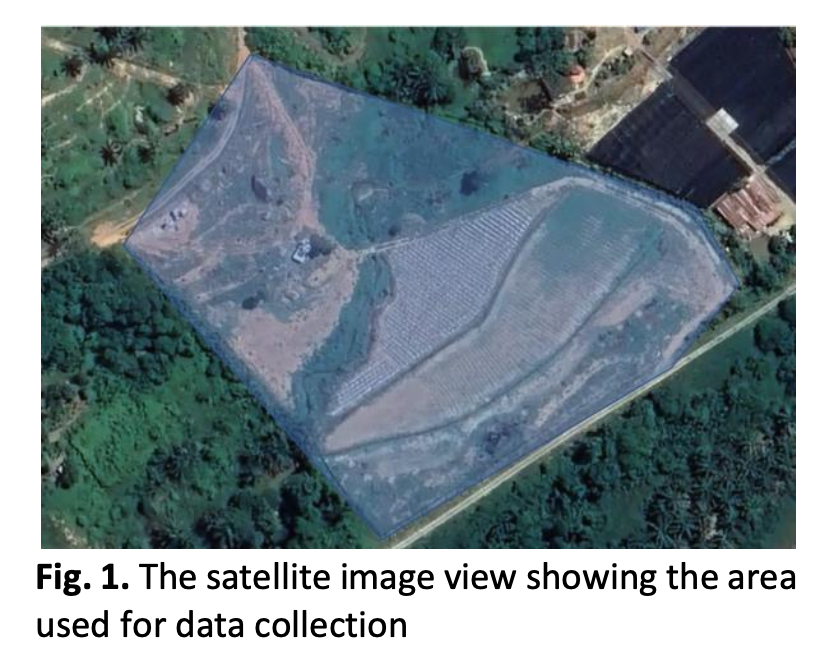

Drones are unmanned aerial vehicles that can be remotely operated to perform a variety of tasks. They have been used in search and rescue operations since the early 2000s and have proven to be invaluable tools for quickly locating missing persons in difficult terrain and environments. In certain cases, automated human detection on the drone camera feed can help responders locate victims more effectively. In this work, we propose the use of a deep learning method called You Only Look Once version 5, or YOLOv5. The YOLOv5 model is trained using data collected during a simulated search and rescue operation in which mannequins represented human victims. Video was acquired using a DJI Matrice 300 drone with a Zenmuse H20T camera, flown at a height of 40 meters over an area of more than 15,000 m² with varied terrain such as farms, ravines, and a river. The drone flew grid, circular, and zigzag patterns at three different camera zoom levels, and the data was captured on different days and at different times. The total duration of the video, collected at 1080p@30fps, is 148 minutes 26 seconds. Five pretrained YOLOv5 models of different complexities were trained and tested using this dataset. The pretrained yolov5l6 model delivered the best precision, recall, and mAP50, at 0.668, 0.303, and 0.346 respectively. The experiments also showed that overall performance improves when only images acquired at 6x zoom are used, with precision, recall, and mAP50 rising to 0.846, 0.543, and 0.591 respectively. The yolov5l6 model also delivered an acceptable inference time of 43 ms per 1920x1080 image, which corresponds to roughly 23 fps.
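The throughput figure follows directly from the reported inference time. The short sketch below reproduces that arithmetic and compares it with the 30 fps capture rate. The function name `frames_per_second` is hypothetical, and the calculation assumes that inference time is the only per-frame cost, ignoring video decoding and any pre- or post-processing.

```python
def frames_per_second(inference_ms: float) -> float:
    """Convert a per-image inference time in milliseconds to frames per second."""
    if inference_ms <= 0:
        raise ValueError("inference time must be positive")
    return 1000.0 / inference_ms


# Values reported in the abstract.
INFERENCE_MS = 43.0   # yolov5l6, per 1920x1080 image
CAPTURE_FPS = 30.0    # video recorded at 1080p@30fps

fps = frames_per_second(INFERENCE_MS)

# True only if the detector could process every captured frame
# (assumption: inference time is the only per-frame cost).
keeps_up = fps >= CAPTURE_FPS

print(f"{fps:.1f} fps")  # 23.3 fps
print(f"processes every captured frame: {keeps_up}")
```

Under these assumptions the detector runs somewhat below the capture rate, so processing a live 30 fps feed at this speed would mean skipping some frames.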