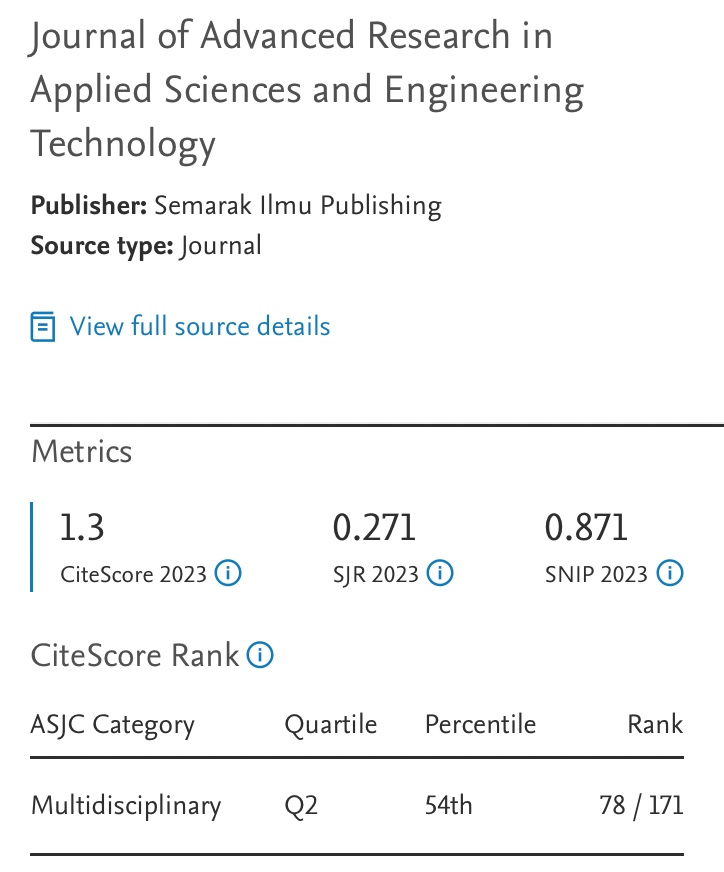

Android-Based App Guava Leaf Diseases Identification using Convolution Neural Network

DOI:

https://doi.org/10.37934/araset.57.1.7388

Keywords:

Guava leaf disease, CNN, Deep learning, Transfer-learning, InceptionV3

Abstract

Guava is an economically significant fruit crop in many regions. Conventional plant disease identification depends on skilled workers visually inspecting samples, which is laborious and error-prone. The goal of this study is therefore to create an Android app that uses InceptionV3 to accurately determine the health of guava plants, with an emphasis on hyperparameter tuning and model selection. InceptionV3 was chosen as the base model because it outperformed the 25 pre-trained models from the Keras API. The guava leaf dataset consists of 120 raw images collected by real-time capture and from the Google website. The images belong to four categories: Algal Spot, Red Rust, Whitefly, and Healthy leaf. The dataset was split into 60% for training and 40% for validation. To increase the number of images, augmentation was applied to multiply each training image fivefold. The 2048 features were extracted from the second-to-last layer of InceptionV3 and saved in NumPy format to facilitate hyperparameter tuning. The best hyperparameter tuning results were achieved with a batch size of 4, a learning rate of 0.001, and the Adamax optimizer for 50 epochs, giving a validation accuracy of 0.99. This set of hyperparameters was then used to train the model, yielding a training accuracy of 0.98 and a validation accuracy of 0.9. The trained model was exported in the TensorFlow Lite (.tflite) file format and integrated into an Android app developed using Android Studio. To evaluate the app's performance, 15 unseen images per class were used; the app achieved an overall F1-score of 0.85, a recall of 0.85, a precision of 0.89, and a test accuracy of 0.85.
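As an illustration of how the reported test metrics relate to one another, the following minimal sketch computes accuracy and macro-averaged precision, recall, and F1-score from a confusion matrix. The matrix values are hypothetical and are not the study's results; the function names are illustrative, and the use of macro averaging is an assumption, since the abstract does not state which averaging scheme was applied.

```python
# Sketch only: the confusion matrix below is made-up illustrative data,
# NOT the results reported in the study. Macro averaging is an assumption.

CLASSES = ["Algal Spot", "Red Rust", "Whitefly", "Healthy"]

# confusion[i][j] = number of images of true class i predicted as class j.
# Each row sums to 15, mirroring the 15 unseen test images per class.
confusion = [
    [13, 1, 1, 0],
    [2, 11, 2, 0],
    [0, 3, 10, 2],
    [0, 0, 1, 14],
]


def per_class_metrics(cm):
    """Return a list of (precision, recall, f1) tuples, one per class."""
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # true class k, predicted otherwise
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, true class differs
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results.append((precision, recall, f1))
    return results


def macro_average(results):
    """Unweighted mean of each metric across classes."""
    n = len(results)
    return tuple(sum(r[m] for r in results) / n for m in range(3))


def accuracy(cm):
    """Fraction of all images that lie on the diagonal."""
    correct = sum(cm[k][k] for k in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


acc = accuracy(confusion)
macro_p, macro_r, macro_f1 = macro_average(per_class_metrics(confusion))

print(f"accuracy={acc:.2f} precision={macro_p:.2f} "
      f"recall={macro_r:.2f} f1={macro_f1:.2f}")
```

When every class has the same number of test images, as with 15 per class here, macro-averaged recall equals overall accuracy. This is consistent with the abstract reporting 0.85 for both, while precision can differ because it depends on how the misclassifications are distributed across the predicted classes.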