BiasTrap: Runtime Detection of Biased Prediction in Machine Learning Systems

DOI:

https://doi.org/10.37934/araset.40.2.127139

Keywords:

Fairness Testing, Runtime Verification, Bias Detection, Machine Learning Systems

Abstract

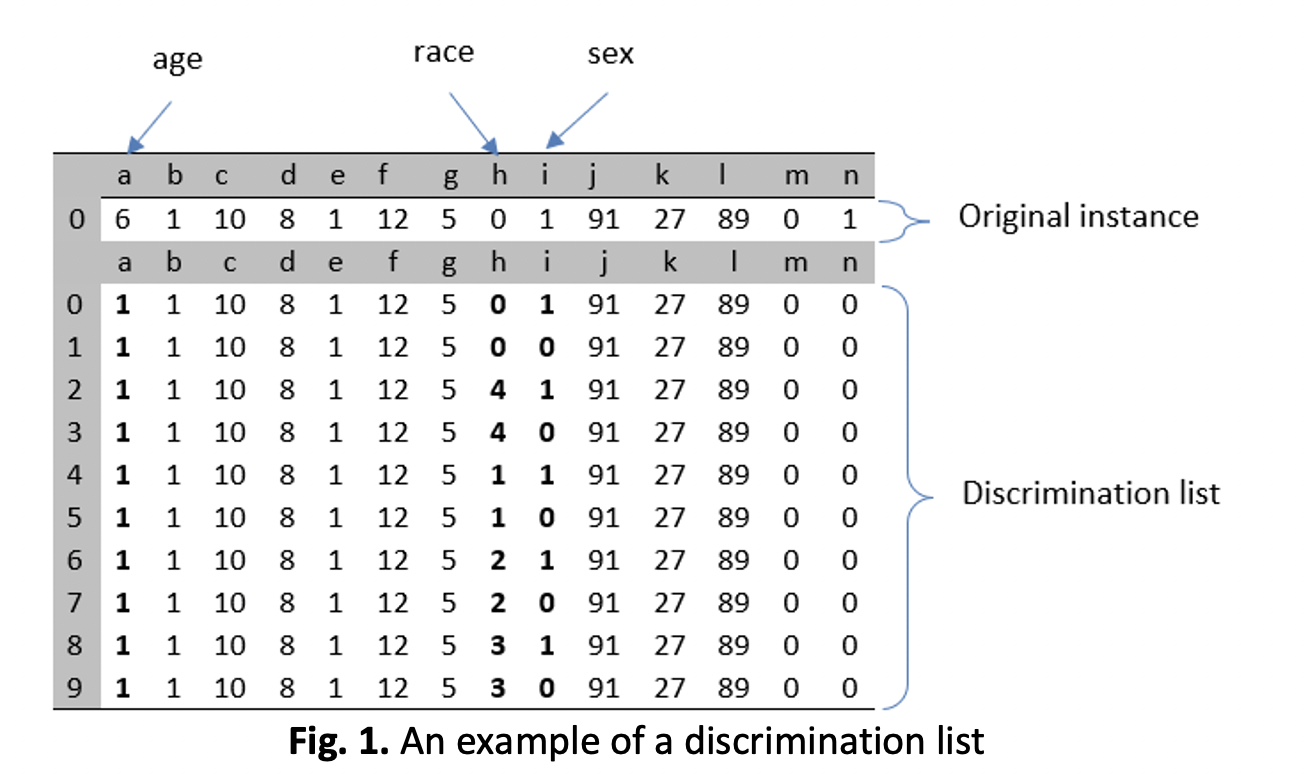

Machine Learning (ML) systems are now widely used in fields such as hiring, healthcare, and criminal justice, but they are prone to unfairness and discrimination, which can have serious consequences for individuals and society. Although various fairness testing methods have been developed to tackle this issue, they lack a mechanism for continuously monitoring ML system behaviour at runtime. This study proposes BiasTrap, a runtime verification tool for detecting and preventing discrimination in ML systems. The tool combines a data augmentation component, which creates instances that differ in their sensitive attributes, with a bias detection component, which analyses those instances to reveal discriminatory behaviour in the ML model. Simulation results demonstrate that BiasTrap can effectively detect discriminatory behaviour in ML models trained on different datasets using various algorithms. BiasTrap is therefore a valuable tool for ensuring fairness in ML systems in real time.
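The abstract does not give implementation details, so the following is only a minimal sketch of the general idea, not BiasTrap itself. It assumes that augmentation means copying an incoming instance while changing only its sensitive attribute, and that detection means flagging the prediction when any such copy receives a different outcome. The function names (`augment`, `is_biased`), the toy models, and the example attributes are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence

Instance = Dict[str, object]


def augment(instance: Instance, attribute: str,
            values: Sequence[object]) -> List[Instance]:
    """Return copies of `instance` that differ only in the sensitive attribute."""
    return [{**instance, attribute: v}
            for v in values if v != instance[attribute]]


def is_biased(predict: Callable[[Instance], object], instance: Instance,
              attribute: str, values: Sequence[object]) -> bool:
    """Flag the prediction if any augmented variant gets a different outcome."""
    original = predict(instance)
    return any(predict(variant) != original
               for variant in augment(instance, attribute, values))


# Toy stand-in models (hypothetical, for illustration only).
def biased_model(x: Instance) -> str:
    # Uses the sensitive attribute directly in its decision.
    return "hire" if x["gender"] == "male" and x["experience"] >= 3 else "reject"


def fair_model(x: Instance) -> str:
    # Ignores the sensitive attribute entirely.
    return "hire" if x["experience"] >= 3 else "reject"


applicant = {"gender": "female", "experience": 5}
genders = ["male", "female"]

print(is_biased(biased_model, applicant, "gender", genders))
print(is_biased(fair_model, applicant, "gender", genders))
```

Under these assumptions, the check runs per prediction, so it could be wrapped around a deployed model's inference call to monitor behaviour at runtime rather than only during offline testing.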
